How to Develop High-Performance Deep Neural Network Object Detection/Recognition Applications for FPGA-based Edge Devices - Embedded Computing Design

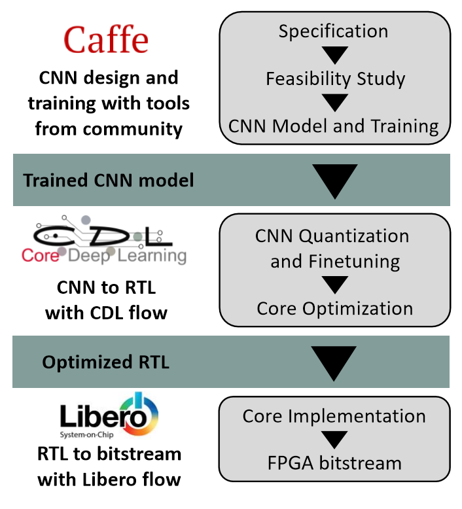

How to develop high-performance deep neural network object detection/recognition applications for FPGA-based edge devices - Aldec

Intel Speeds AI Development, Deployment and Performance with New Class of AI Hardware from Cloud to Edge | Business Wire

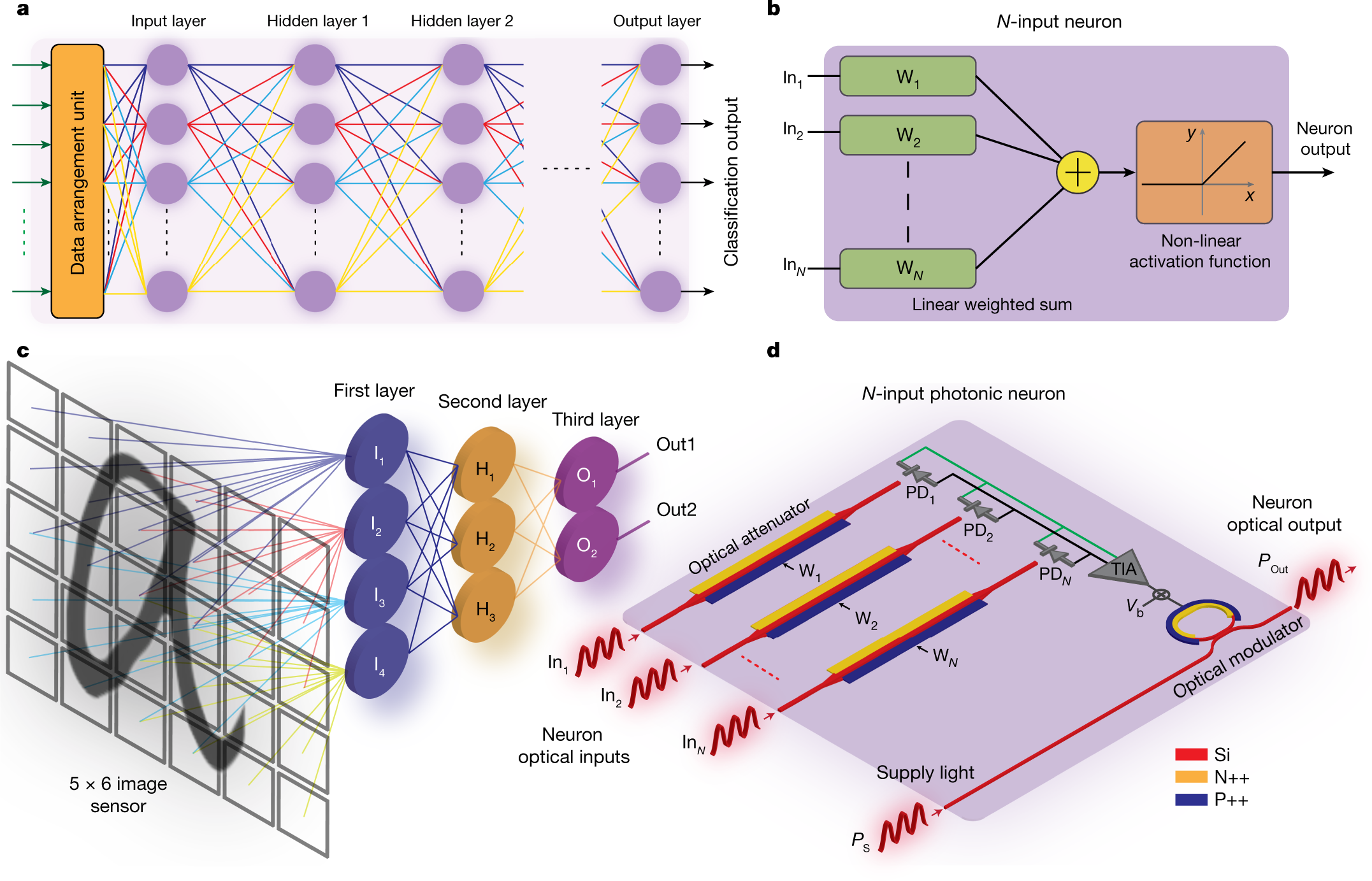

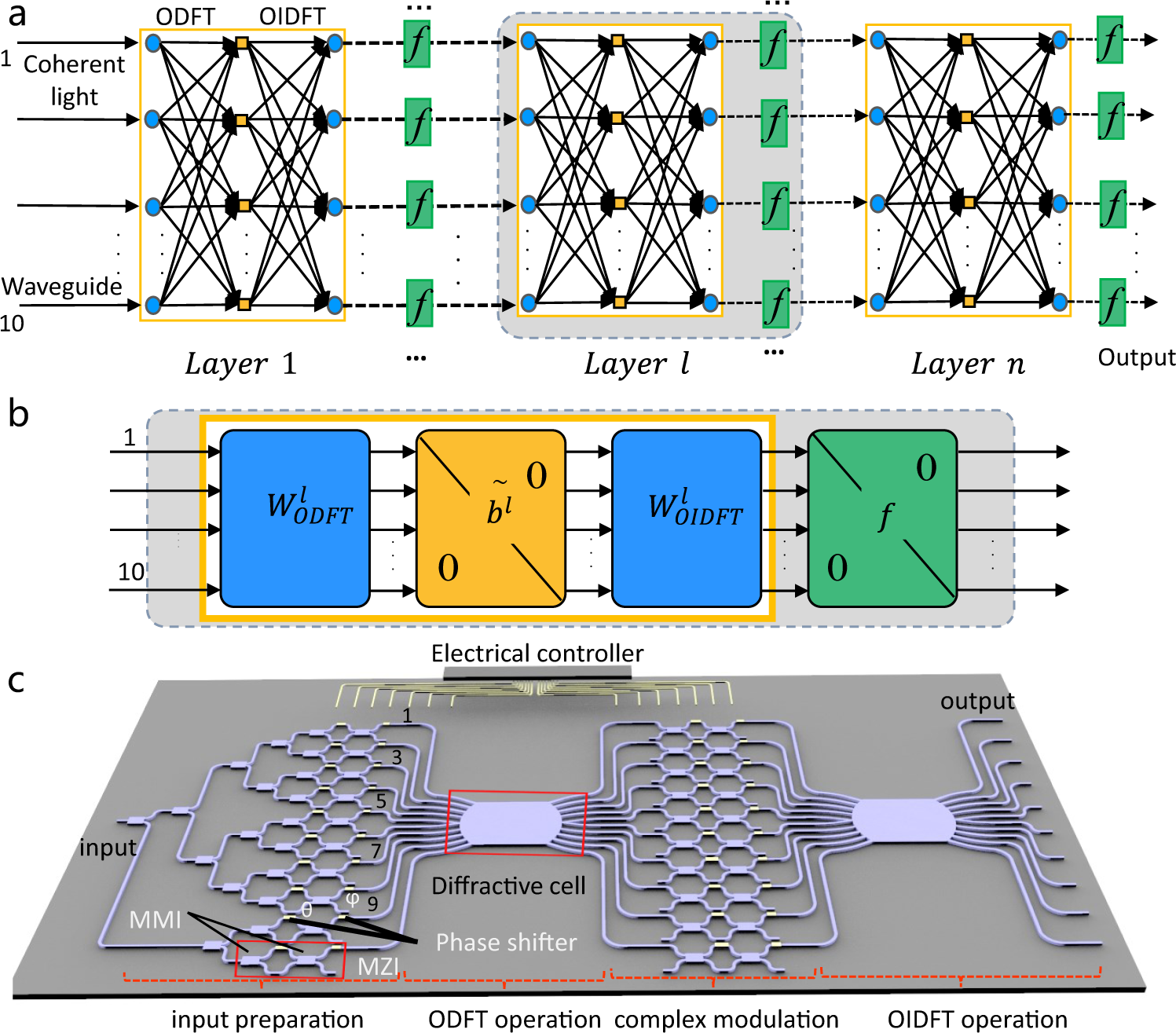

Space-efficient optical computing with an integrated chip diffractive neural network | Nature Communications